The Qt 3D Render aspect allows the rendering algorithm to be entirely data-driven. The controlling data structure is known as the framegraph. Just as the Qt 3D ECS (entity component system) lets you define a so-called Scenegraph by building a scene from a tree of Entities and Components, the framegraph is also a tree structure, but one used for a different purpose: controlling how the scene is rendered.

Over the course of rendering a single frame, a 3D renderer will likely change state many times. The number and nature of these state changes depend not only upon which materials (shaders, mesh geometry, textures and uniform variables) are found within the scene, but also upon which high-level rendering scheme you are using.

For example, using a traditional simple forward rendering scheme is very different to using a deferred rendering approach. Other features such as reflections, shadows, multiple viewports, and early z-fill passes all change which states a renderer needs to set over the course of a frame and when those state changes need to occur.

As a comparison, the Qt Quick 2 scenegraph renderer responsible for drawing Qt Quick 2 scenes is hard-wired in C++ to do things like batching of primitives and rendering opaque items followed by transparent items. In the case of Qt Quick 2 that is perfectly fine, as it covers all of the requirements. As the examples listed above show, such a hard-wired renderer is unlikely to be flexible enough for generic 3D scenes given the multitude of rendering methods available. Even if a renderer could be made flexible enough to cover all such cases, its performance would likely suffer from being too general. To make matters worse, more rendering methods are being researched all of the time. We therefore needed an approach that is both flexible and extensible whilst being simple to use and maintain. Enter the framegraph!

Each node in the framegraph defines a part of the configuration the renderer will use to render the scene. The position of a node in the framegraph tree determines when and where the subtree rooted at that node will be the active configuration in the rendering pipeline. As we will see later, the renderer traverses this tree in order to build up the state needed for your rendering algorithm at each point in the frame.

Obviously, if you just want to render a simple cube onscreen, you may think this is overkill. However, as soon as you want to render slightly more complex scenes, it comes in handy. For the common cases, Qt 3D provides some example framegraphs that are ready to use out of the box.
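As a rough illustration of such a ready-made framegraph, the sketch below assumes the ForwardRenderer type from the Qt3D.Extras QML module and Qt 5 style versioned imports. The ids and the camera values are placeholders chosen for this example, not something prescribed by this article.

```qml
import Qt3D.Core 2.0
import Qt3D.Render 2.0
import Qt3D.Extras 2.0

Entity {
    id: sceneRoot

    // Placeholder camera; the values are arbitrary.
    Camera {
        id: mainCamera
        projectionType: CameraLens.PerspectiveProjection
        fieldOfView: 45
        nearPlane: 0.1
        farPlane: 1000.0
        position: Qt.vector3d(0.0, 0.0, 20.0)
        viewCenter: Qt.vector3d(0.0, 0.0, 0.0)
    }

    components: [
        RenderSettings {
            // ForwardRenderer is itself a prebuilt framegraph tree.
            activeFrameGraph: ForwardRenderer {
                camera: mainCamera
                clearColor: "black"
            }
        }
    ]
}
```

For a single cube this is typically all the renderer configuration that is needed; a hand-written framegraph only becomes necessary once the rendering scheme departs from this default.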

We will demonstrate the flexibility of the framegraph concept by presenting a few examples and the resulting framegraphs.

Please note that unlike the Scenegraph, which is composed of Entities and Components, the framegraph is only composed of nested nodes which are all subclasses of Qt3DRender::QFrameGraphNode. This is because the framegraph nodes are not simulated objects in our virtual world, but rather supporting information.

We will soon see how to construct our first simple framegraph but before that we will introduce the framegraph nodes available to you. Also as with the Scenegraph tree, the QML and C++ APIs are a 1 to 1 match so you can favor the one you like best. For the sake of readability and conciseness, the QML API was chosen for this article.

The beauty of the framegraph is that, by combining these simple node types, it is possible to configure the renderer to suit your specific needs without touching any hairy, low-level C/C++ rendering code at all.

In order to construct a correctly functioning framegraph tree, you should know a few rules about how it is traversed and how to feed it to the Qt 3D renderer.

The FrameGraph tree should be assigned to the activeFrameGraph property of a QRenderSettings component, which is itself a component of the root entity in the Qt 3D scene. This is what makes it the active framegraph for the renderer. Of course, since this is a QML property binding, the active framegraph (or parts of it) can be changed on the fly at runtime. For example, you might want to use different rendering approaches for indoor and outdoor scenes, or enable or disable some special effect.

    Entity {
        id: sceneRoot
        components: RenderSettings {
            activeFrameGraph: ... // FrameGraph tree
        }
    }

Note: activeFrameGraph is the default property of the RenderSettings component in QML, so the framegraph tree can also be declared directly as its child.

    Entity {
        id: sceneRoot
        components: RenderSettings {
            ... // FrameGraph tree
        }
    }

At its heart, the framegraph is a data-driven method for configuring the Qt 3D renderer. Due to its data-driven nature, we can change configuration at runtime, allow non-C++ developers or designers to change the structure of a frame, and try out new rendering approaches without having to write thousands of lines of boilerplate code.
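To make the runtime aspect concrete, here is a minimal sketch of swapping the active framegraph through a property binding. The `indoors` flag, the ids and the clear colors are hypothetical, and the sketch assumes that framegraph trees can be declared as children of the root entity and referenced by id.

```qml
import Qt3D.Core 2.0
import Qt3D.Render 2.0

Entity {
    id: sceneRoot

    // Hypothetical flag; a real application would drive it from its own logic.
    property bool indoors: true

    Camera { id: sceneCamera }

    // Two alternative framegraph trees, declared once and referenced by id.
    Viewport {
        id: indoorGraph
        ClearBuffers {
            buffers: ClearBuffers.ColorDepthBuffer
            clearColor: "black"
            CameraSelector { camera: sceneCamera }
        }
    }

    Viewport {
        id: outdoorGraph
        ClearBuffers {
            buffers: ClearBuffers.ColorDepthBuffer
            clearColor: "skyblue"
            CameraSelector { camera: sceneCamera }
        }
    }

    components: RenderSettings {
        // Re-evaluated whenever indoors changes, which swaps the active tree.
        activeFrameGraph: sceneRoot.indoors ? indoorGraph : outdoorGraph
    }
}
```

Here the two trees differ only in their clear color, but the same binding could just as well choose between entirely different rendering schemes.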

Now that you know the rules to abide by when writing a framegraph tree, we will go over a few examples and break them down.

Forward rendering is when you use OpenGL in its traditional manner and render directly to the backbuffer one object at a time, shading each one as you go. This is in contrast to deferred rendering, where we render to an intermediate G-buffer. Here is a simple FrameGraph that can be used for forward rendering:

    Viewport {
        normalizedRect: Qt.rect(0.0, 0.0, 1.0, 1.0)
        property alias camera: cameraSelector.camera

        ClearBuffers {
            buffers: ClearBuffers.ColorDepthBuffer

            CameraSelector {
                id: cameraSelector
            }
        }
    }

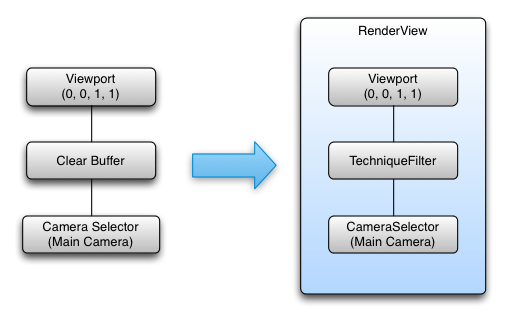

As you can see, this tree has a single leaf and is composed of 3 nodes in total as shown in the following diagram.

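Using the rules defined above, this framegraph tree yields a single RenderView with the following configuration: a viewport that fills the entire window (expressed in normalized coordinates), the color and depth buffers set to be cleared, and the camera specified through the exposed camera property.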

Several different FrameGraph trees can produce the same rendering result. As long as the state collected from leaf to root is the same, the result will also be the same. It is best to put state that remains constant longest nearer to the root of the framegraph as this will result in fewer leaf nodes, and hence, fewer RenderViews overall.

    Viewport {
        normalizedRect: Qt.rect(0.0, 0.0, 1.0, 1.0)
        property alias camera: cameraSelector.camera

        CameraSelector {
            id: cameraSelector

            ClearBuffers {
                buffers: ClearBuffers.ColorDepthBuffer
            }
        }
    }

    CameraSelector {
        Viewport {
            normalizedRect: Qt.rect(0.0, 0.0, 1.0, 1.0)

            ClearBuffers {
                buffers: ClearBuffers.ColorDepthBuffer
            }
        }
    }

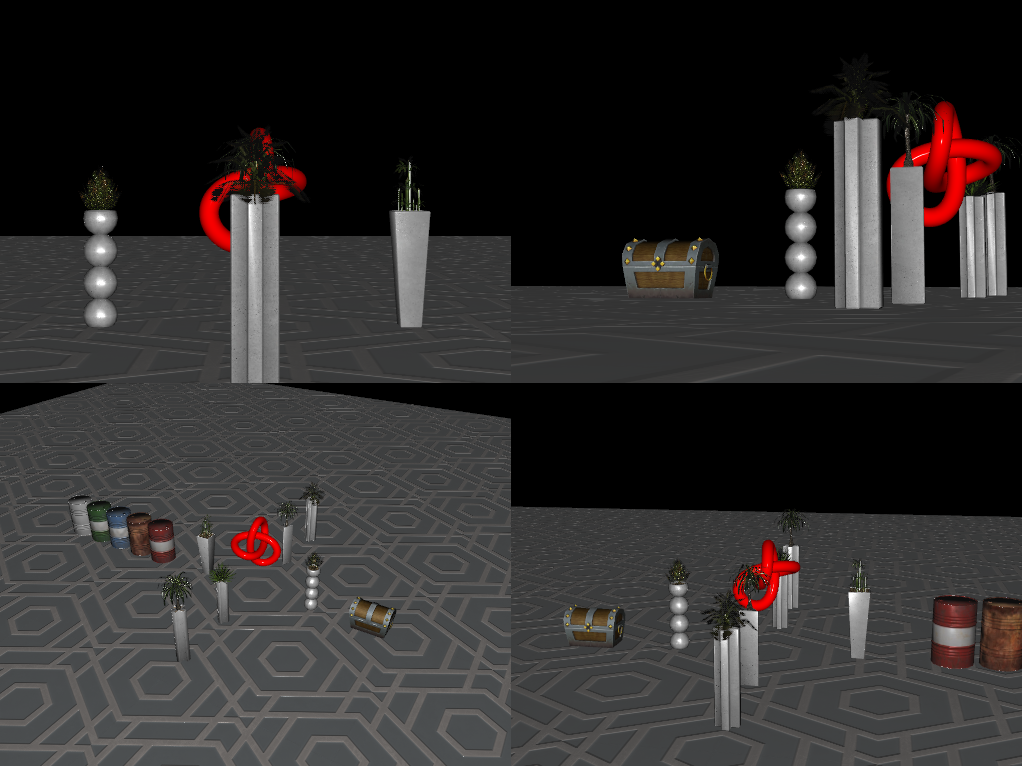

Let us move on to a slightly more complex example that renders a Scenegraph from the point of view of 4 virtual cameras into the 4 quadrants of the window. This is a common configuration for 3D CAD or modelling tools or could be adjusted to help with rendering a rear-view mirror in a car racing game or a CCTV camera display.

    Viewport {
        id: mainViewport
        normalizedRect: Qt.rect(0, 0, 1, 1)

        // One alias per quadrant; property names must be unique and start with a lowercase letter.
        property alias topLeftCamera: cameraSelectorTopLeftViewport.camera
        property alias topRightCamera: cameraSelectorTopRightViewport.camera
        property alias bottomLeftCamera: cameraSelectorBottomLeftViewport.camera
        property alias bottomRightCamera: cameraSelectorBottomRightViewport.camera

        ClearBuffers {
            buffers: ClearBuffers.ColorDepthBuffer
        }

        Viewport {
            id: topLeftViewport
            normalizedRect: Qt.rect(0, 0, 0.5, 0.5)
            CameraSelector { id: cameraSelectorTopLeftViewport }
        }

        Viewport {
            id: topRightViewport
            normalizedRect: Qt.rect(0.5, 0, 0.5, 0.5)
            CameraSelector { id: cameraSelectorTopRightViewport }
        }

        Viewport {
            id: bottomLeftViewport
            normalizedRect: Qt.rect(0, 0.5, 0.5, 0.5)
            CameraSelector { id: cameraSelectorBottomLeftViewport }
        }

        Viewport {
            id: bottomRightViewport
            normalizedRect: Qt.rect(0.5, 0.5, 0.5, 0.5)
            CameraSelector { id: cameraSelectorBottomRightViewport }
        }
    }

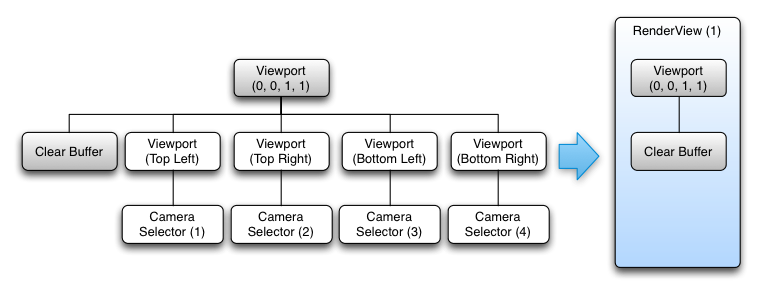

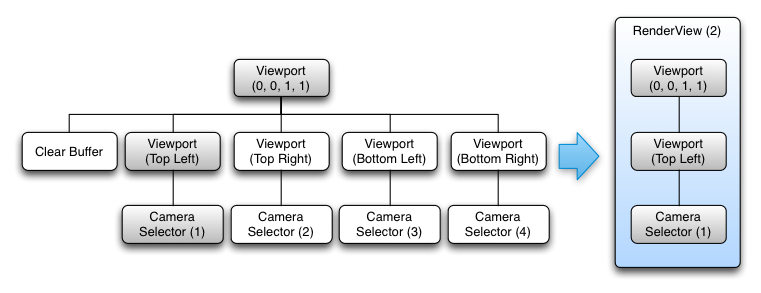

This tree is a bit more complex with 5 leaves. Following the same rules as before we construct 5 RenderView objects from the FrameGraph. The following diagrams show the construction for the first two RenderViews. The remaining RenderViews are very similar to the second diagram just with the other sub-trees.

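In full, the RenderViews created are one that defines the full-window viewport and sets the color and depth buffers to be cleared, followed by four that each define the full-window viewport, a quadrant sub-viewport within it, and the camera selected for that quadrant.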

However, in this case the order is important. If the ClearBuffers node were to be the last instead of the first, this would result in a black screen for the simple reason that everything would be cleared right after having been so carefully rendered. For a similar reason, it could not be used as the root of the FrameGraph as that would result in a call to clear the whole screen for each of our viewports.

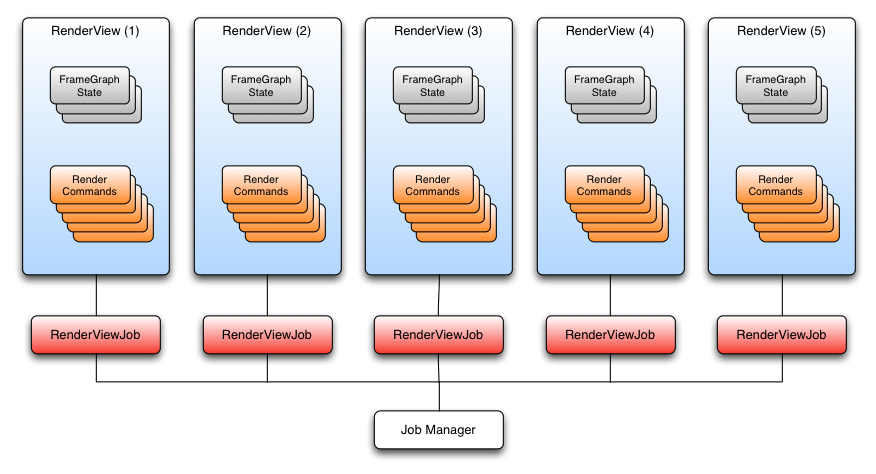

Although the declaration order of the FrameGraph is important, Qt 3D is able to process each RenderView in parallel as each RenderView is independent of the others for the purposes of generating a set of RenderCommands to be submitted whilst the RenderView's state is in effect.

Qt 3D uses a task-based approach to parallelism which naturally scales up with the number of available cores. This is shown in the following diagram for the previous example.

The RenderCommands for the RenderViews can be generated in parallel across many cores, and as long as we take care to submit the RenderViews in the correct order on the dedicated OpenGL submission thread, the resulting scene will be rendered correctly.

When it comes to rendering, deferred rendering is a different beast in terms of renderer configuration compared to forward rendering. Instead of drawing each mesh and applying a shader effect to shade it, deferred rendering adopts a two render pass method.

First, all the meshes in the scene are drawn using the same shader that will output, usually for each fragment, at least four values: a normal vector, a color, a depth, and a position vector.

Each of these values will be stored in a texture. The normal, color, depth, and position textures form what is called the G-Buffer. Nothing is drawn onscreen during the first pass, but rather drawn into the G-Buffer ready for later use.
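As an illustration of how such a G-Buffer might be declared, here is a sketch of a render target with one texture per value. It is loosely modeled on the kind of helper type a deferred renderer would use; the file name, texture sizes and texture formats are assumptions made for this example.

```qml
// GBuffer.qml (hypothetical file name)
import Qt3D.Core 2.0
import Qt3D.Render 2.0

RenderTarget {
    // Expose each texture so that a later pass can read from it.
    property alias color: colorAttachment
    property alias position: positionAttachment
    property alias normal: normalAttachment
    property alias depth: depthAttachment

    attachments: [
        RenderTargetOutput {
            attachmentPoint: RenderTargetOutput.Color0
            texture: Texture2D {
                id: colorAttachment
                width: 1024; height: 1024      // assumed size
                format: Texture.RGBA32F
                generateMipMaps: false
                magnificationFilter: Texture.Linear
                minificationFilter: Texture.Linear
            }
        },
        RenderTargetOutput {
            attachmentPoint: RenderTargetOutput.Color1
            texture: Texture2D {
                id: positionAttachment
                width: 1024; height: 1024
                format: Texture.RGBA32F        // full float precision for positions
                generateMipMaps: false
                magnificationFilter: Texture.Linear
                minificationFilter: Texture.Linear
            }
        },
        RenderTargetOutput {
            attachmentPoint: RenderTargetOutput.Color2
            texture: Texture2D {
                id: normalAttachment
                width: 1024; height: 1024
                format: Texture.RGB16F
                generateMipMaps: false
                magnificationFilter: Texture.Linear
                minificationFilter: Texture.Linear
            }
        },
        RenderTargetOutput {
            attachmentPoint: RenderTargetOutput.Depth
            texture: Texture2D {
                id: depthAttachment
                width: 1024; height: 1024
                format: Texture.D32F
                generateMipMaps: false
                magnificationFilter: Texture.Linear
                minificationFilter: Texture.Linear
            }
        }
    ]
}
```

A framegraph can then select an instance of this type as its render target for the first pass and hand the exposed textures to the second pass as parameters.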

Once all the meshes have been drawn, the G-Buffer is filled with all the meshes that can currently be seen by the camera. The second render pass is then used to render the scene to the back buffer with the final color shading by reading the normal, color, and position values from the G-buffer textures and outputting a color onto a full screen quad.

The advantage of that technique is that the heavy computing power required for complex effects is only used during the second pass, and only on the elements that are actually seen by the camera. The first pass does not cost much processing power as every mesh is being drawn with a simple shader. Deferred rendering, therefore, decouples shading and lighting from the number of objects in a scene and instead couples it to the resolution of the screen (and G-Buffer). This is a technique that has been used in many games due to the ability to use large numbers of dynamic lights at the expense of additional GPU memory usage.

    Viewport {
        id: root
        normalizedRect: Qt.rect(0.0, 0.0, 1.0, 1.0)

        property GBuffer gBuffer
        property alias camera: sceneCameraSelector.camera
        property alias sceneLayer: sceneLayerFilter.layers
        property alias screenQuadLayer: screenQuadLayerFilter.layers

        RenderSurfaceSelector {

            CameraSelector {
                id: sceneCameraSelector

                // Fill G-Buffer
                LayerFilter {
                    id: sceneLayerFilter

                    RenderTargetSelector {
                        id: gBufferTargetSelector
                        target: gBuffer

                        ClearBuffers {
                            buffers: ClearBuffers.ColorDepthBuffer

                            RenderPassFilter {
                                id: geometryPass
                                matchAny: FilterKey {
                                    name: "pass"
                                    value: "geometry"
                                }
                            }
                        }
                    }
                }

                TechniqueFilter {
                    parameters: [
                        Parameter { name: "color"; value: gBuffer.color },
                        Parameter { name: "position"; value: gBuffer.position },
                        Parameter { name: "normal"; value: gBuffer.normal },
                        Parameter { name: "depth"; value: gBuffer.depth }
                    ]

                    RenderStateSet {
                        // Render FullScreen Quad
                        renderStates: [
                            BlendEquation { blendFunction: BlendEquation.Add },
                            BlendEquationArguments {
                                sourceRgb: BlendEquationArguments.SourceAlpha
                                destinationRgb: BlendEquationArguments.DestinationColor
                            }
                        ]

                        LayerFilter {
                            id: screenQuadLayerFilter

                            ClearBuffers {
                                buffers: ClearBuffers.ColorDepthBuffer

                                RenderPassFilter {
                                    matchAny: FilterKey {
                                        name: "pass"
                                        value: "final"
                                    }
                                    parameters: Parameter {
                                        name: "winSize"
                                        value: Qt.size(1024, 768)
                                    }
                                }
                            }
                        }
                    }
                }
            }
        }
    }

(The above code is adapted from qt3d/tests/manual/deferred-renderer-qml.)

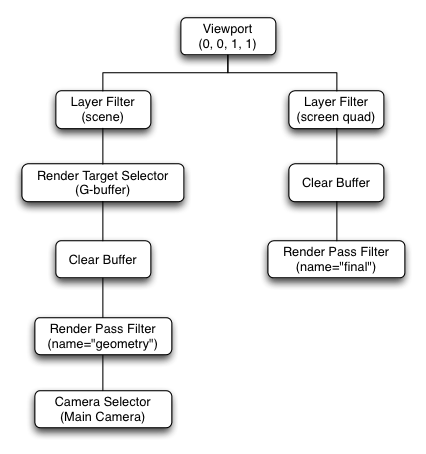

Graphically, the resulting framegraph looks like:

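And the resulting RenderViews are:

RenderView (1): selects the camera to use, defines a viewport that fills the whole window, selects the entities in the sceneLayer layer, sets gBuffer as the active render target, clears the color and depth buffers of the currently bound render target (the gBuffer), and restricts rendering to render passes matching the "geometry" filter key of the RenderPassFilter.

RenderView (2): selects the same camera and full-window viewport, passes the gBuffer textures to the shaders as parameters, sets the blending render states, selects the entities in the screenQuadLayer layer, clears the color and depth buffers of the currently bound framebuffer (the screen), and restricts rendering to render passes matching the "final" filter key of the RenderPassFilter.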

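Since the FrameGraph tree is entirely data-driven and can be modified dynamically at runtime, you can switch rendering approaches while the application is running (for example between indoor and outdoor scenes), enable or disable special effects, and try out new rendering techniques without writing low-level rendering code.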

We have introduced the FrameGraph and the node types that compose it. We then went on to discuss a few examples to illustrate the framegraph building rules and how the Qt 3D engine uses the framegraph behind the scenes. By now you should have a pretty good overview of the FrameGraph and how it can be used (perhaps to add an early z-fill pass to a forward renderer). Also, keep in mind that the FrameGraph is a tool for you to use so that you are not tied down to the renderer and materials that Qt 3D provides out of the box.
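As a parting illustration, an early z-fill pass added to a forward renderer might be sketched as follows. This is only a sketch under stated assumptions: the filter key values "zFill" and "color" are hypothetical and would have to match render passes defined by the scene's materials, and the render states shown are one plausible choice rather than a prescribed setup.

```qml
import Qt3D.Core 2.0
import Qt3D.Render 2.0

Viewport {
    normalizedRect: Qt.rect(0.0, 0.0, 1.0, 1.0)
    property alias camera: cameraSelector.camera

    CameraSelector {
        id: cameraSelector

        // First RenderView: clear the buffers, then lay down depth only.
        ClearBuffers {
            buffers: ClearBuffers.ColorDepthBuffer

            RenderPassFilter {
                // Hypothetical key: materials must provide a pass tagged "zFill".
                matchAny: FilterKey { name: "pass"; value: "zFill" }

                RenderStateSet {
                    renderStates: [
                        DepthTest { depthFunction: DepthTest.Less },
                        // Assumption: setting a channel to false disables writes to it.
                        ColorMask {
                            redMasked: false; greenMasked: false
                            blueMasked: false; alphaMasked: false
                        }
                    ]
                }
            }
        }

        // Second RenderView: shade only fragments that survive the depth test
        // against the depth values written by the first pass.
        RenderPassFilter {
            // Hypothetical key: materials must provide a pass tagged "color".
            matchAny: FilterKey { name: "pass"; value: "color" }

            RenderStateSet {
                renderStates: [
                    DepthTest { depthFunction: DepthTest.LessOrEqual },
                    NoDepthMask { }   // depth is already filled, so stop writing it
                ]
            }
        }
    }
}
```

Because the ClearBuffers branch is declared first, the depth-only pass is submitted before the shading pass, which follows the ordering rule discussed for the multi-viewport example.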